Let’s be real: AI is exploding. Like, really exploding. It’s not some futuristic sci-fi fantasy anymore; it’s becoming a constant, buzzing presence in our lives, and the speed at which it’s developing is frankly terrifying. We’re talking about algorithms that can generate text, images, music, and code, all at a pace that makes your head spin. It feels like we’re racing a digital beast, and we’re not exactly sure where it’s headed.

So, what’s the big deal? Well, the core concern is this: the rapid growth of AI, combined with how readily it learns from whatever data it’s given, and with the quality of that data itself, can create a dangerous feedback loop. If we feed a system information that’s already biased, it can amplify existing prejudices and build echo chambers. That’s a really unsettling prospect, isn’t it?

Let’s break it down into a few key areas. First, think about training data. AI learns from what it’s given. If that data reflects the biases of society (historical inequalities, gender stereotypes, whatever), the AI will tend to reproduce those biases and can even intensify them. Simply adding more data doesn’t fix this: more of the same skewed data just teaches the same skew more confidently. It’s a subtle, systemic problem, and one that’s getting harder to tame.
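
To make that concrete, here’s a toy sketch in Python. Everything in it is hypothetical: the loan history, the group labels "A" and "B", and the functions `train_naive_model` and `predict` are made up for illustration, and no real system is this crude. Every applicant in the invented history is equally qualified, but group B was approved less often. A model that simply learns past approval rates per group picks up that gap directly.

```python
from collections import defaultdict

# Hypothetical historical loan decisions: (group, qualified, approved).
# Every applicant is equally qualified; only the group label differs.
history = (
    [("A", True, True)] * 80 + [("A", True, False)] * 20
    + [("B", True, True)] * 40 + [("B", True, False)] * 60
)


def train_naive_model(records):
    """Learn the historical approval rate for each group (deliberately naive)."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, _qualified, was_approved in records:
        total[group] += 1
        approved[group] += was_approved  # True counts as 1, False as 0
    return {g: approved[g] / total[g] for g in total}


def predict(model, group, threshold=0.5):
    """Approve only if the learned rate for the applicant's group clears the threshold."""
    return model[group] >= threshold


model = train_naive_model(history)
print(model)  # {'A': 0.8, 'B': 0.4}
print(predict(model, "A"), predict(model, "B"))  # True False
```

Notice the amplification: in the invented history, group B applicants were approved 40% of the time, but once the learned rate is pushed through a 0.5 threshold, they’re approved 0% of the time. Real models are far more complex, but the same basic dynamic can show up whenever the historical outcomes a model learns from were skewed.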

Second, the speed of development means that any attempt to correct these biases is quickly overtaken by new, subtler ones. An algorithm that was carefully tuned to be fair gets ‘improved’ with a new technique, and suddenly it produces subtly different results, shifting the balance without anyone noticing. It’s a race against the tide.

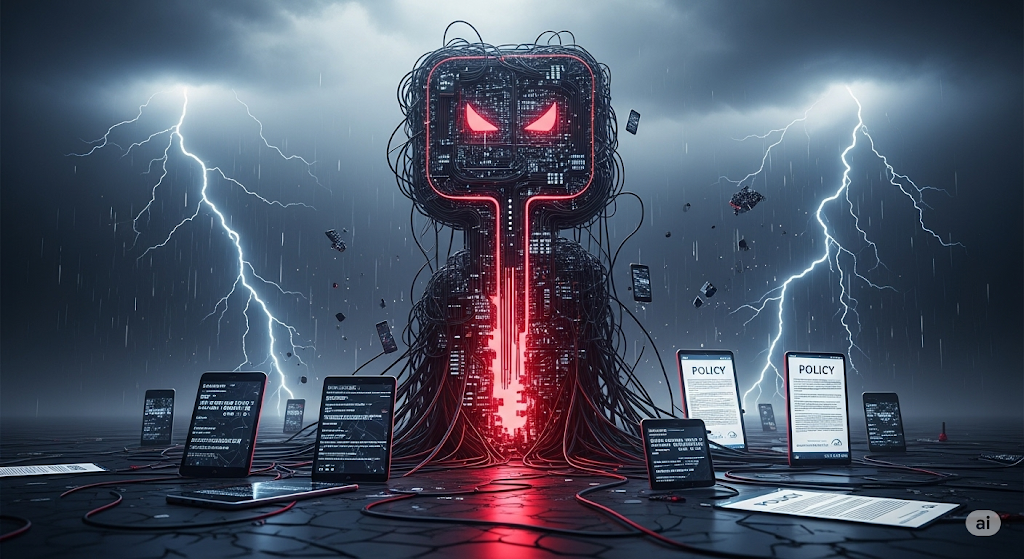

Think about how AI is being used now, in everything from content recommendation to loan applications. These aren’t just ‘technical’ issues; they’re about automating and amplifying existing social inequalities. The conveniences AI offers, like personalization and efficiency, can become tools for reinforcing privilege and disadvantaging people who are underrepresented in the data or left out of the digital world altogether.

It’s also a problem of echo chambers, which the article rightly points out. Many recommendation systems are optimized for engagement, which in practice often means showing us more of what we already agree with. That limits our exposure to differing viewpoints, so complex problems get debated without a real understanding of them. The challenge isn’t just technological; it’s about human decision-making and the role of algorithms in shaping our realities.

Ultimately, we’re talking about a shift in power: the ability to create and disseminate information at an unprecedented scale. And that kind of power, left unchecked, can have devastating consequences.

It’s easy to get caught up in the hype, but we need to be brutally honest about the risks. Are we truly considering the long-term impact of these systems? Are we prioritizing fairness and inclusivity alongside speed and innovation? These are questions we need to be asking ourselves, and the answers, along with how quickly we reach them, may be critical to the future we’re building.
